The New Zealand Government recently released its inaugural AI Strategy, framed as “Investing with Confidence”, along with voluntary “Responsible AI Guidance” for businesses. The strategy takes a light-touch, principles-based approach, aligning with the OECD AI Principles and relying on existing legal frameworks.

In this environment, AI technologists can and will continue to cannibalise news services in the absence of robust regulation or ethical enforcement, potentially leading to even more journalism job losses and news organisation closures.

In the absence of AI-specific regulation, here is the tactical playbook for AI-driven news aggregation in an unregulated media economy. News organisations should be very worried, because much of it will sound very familiar to legacy media.

Executive Summary

In a global media landscape that lacks strong regulation and often rewards unethical tactics, technologists are free to build the next generation of AI-driven news aggregation platforms, which systematically cannibalise traditional news services regardless of paywalls or journalistic protections.

The playbook mirrors past moves by Big Tech, notably Google's unregulated ascent as the global index of journalism. Today's advances in LLMs and generative AI present a new arsenal of tools, or weapons, to replicate, summarise, and ultimately replace journalism at scale without ever employing a journalist.

This analysis explores the tactical blueprint for such cannibalisation, the anticipated resistance from legacy newsrooms, and the mechanisms by which these obstacles can be neutralised or circumvented in a profit-maximising, regulation-light environment.

Tactical Playbook: How tech cannibalises news

1. Scrape First, Monetise Later

Technologists can begin by mass-scraping news content from across the open web, including headlines, summaries, and snippets, using crawlers that identify themselves as ordinary browsers or friendly bots. Paywalled sites can be parsed using proxy tools, cached mirrors, or third-party aggregators.

Google News indexed snippets under the guise of “fair use,” monetising clicks without sharing ad revenue.

2. LLM-Driven Paraphrasing and Summarisation

Using large language models, scraped content can be instantly rewritten, summarised, or restructured to avoid accusations of plagiarism. The output appears novel, even if the underlying journalism has merely been repackaged. Models can strip out paywalled attribution entirely.

Attribution is not technically required if the AI produces “original” text, making detection and litigation extremely difficult.

3. Synthetic Personalisation

By leveraging user data (search, clickstream, location), the aggregator tailors rewritten news to individual preferences, appearing more “useful” than the original source. This undermines the editorial role of human journalists as public-interest filters.

A key tactic is to add micro-commentary or “AI insights” to give the illusion of value-add while exploiting journalist-created content.

4. Incentivised Contribution and Community Input

Borrowing from Reddit or YouTube, platforms can crowdsource news links or commentary, creating a feedback loop where user data becomes part of training corpora. The AI learns how to mimic editorial judgment from users.

For example, Google uses human search behaviour to optimise ranking and featured snippet selection, without hiring editors.

5. Shadow Syndication

The AI system can be trained to identify high-quality journalism, extract its key insights, and rewrite the material into press-release-style “original” stories. These can be published under a generic byline and redistributed via APIs or newsletters, simulating a wire service.

A monetisation model would offer the API as a backend “news engine” to startups, governments, or influencers needing cheap content.

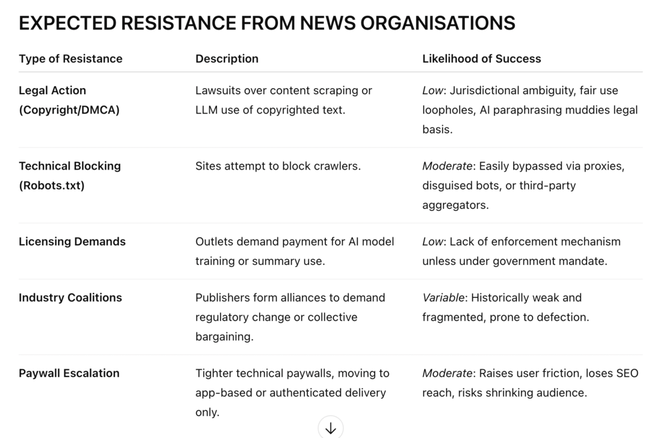

How tech companies overcome resistance

1. Legibility Obfuscation

Make it impossible to trace the origin of AI-generated news summaries. Abstract the language enough that detection tools fail, ensuring plausible deniability.

2. Jurisdiction Shopping

Headquarter in territories with weak copyright enforcement or AI oversight. This neutralises most legal threats from Western news organisations.

3. Offer Backchannel Deals

Quietly pay a few key outlets or give them traffic boosts in exchange for silence or API access. This fractures coalitions and prevents collective resistance.

4. Leverage Regulatory Capture

Influence digital regulation by framing AI aggregation as “democratising information” or “fighting misinformation.” Position traditional media as gatekeepers of elitist knowledge.

Language to use: “Open access,” “anti-censorship,” “AI for good.”

5. Exploit the Collapse of Local News

Target markets where journalism has already weakened (e.g., local papers in rural areas). Provide AI-generated summaries of council meetings or police reports, claiming public value.

This creates the illusion of filling a civic gap while displacing real journalism.

The Underlying Environment: The Incentives To Cheat

The current digital era is defined by:

Low public trust in media,

Declining news subscriptions,

Cheap compute and vast AI models,

A regulatory vacuum, and

Investor pressure to scale at all costs.

These conditions create strong structural incentives for technologists to behave unethically. The market rewards scale, speed, and stickiness, qualities easily achieved through parasitic extraction of journalistic work.

Journalism, with its labour-intensive costs and ethical overhead, cannot compete under these dynamics without radical intervention.

Conclusion

AI technologists can and will cannibalise news services in the absence of robust regulation or ethical enforcement. By exploiting grey areas in copyright law, paraphrasing content, leveraging personalisation algorithms, and strategically undermining publisher resistance, these systems can create a façade of original news while hollowing out the foundations of journalism.

This trend, left unchecked, points toward an information ecosystem where the appearance of journalism persists but the journalistic function dies.